Source: https://root-servers.org/

Current State of DNS Root Servers

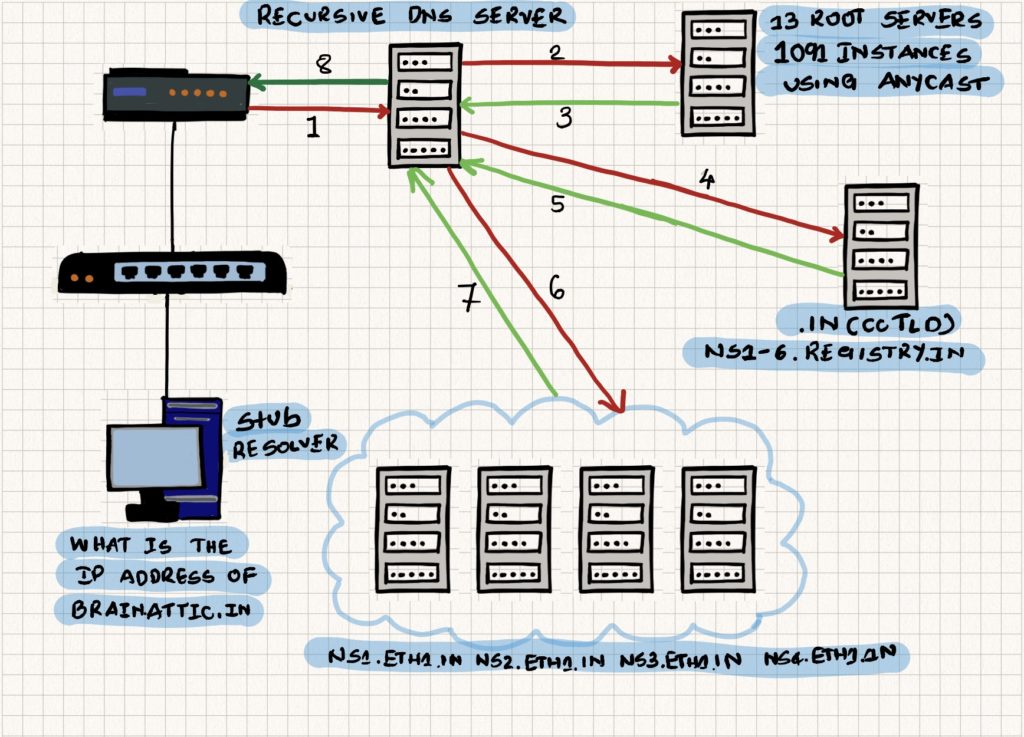

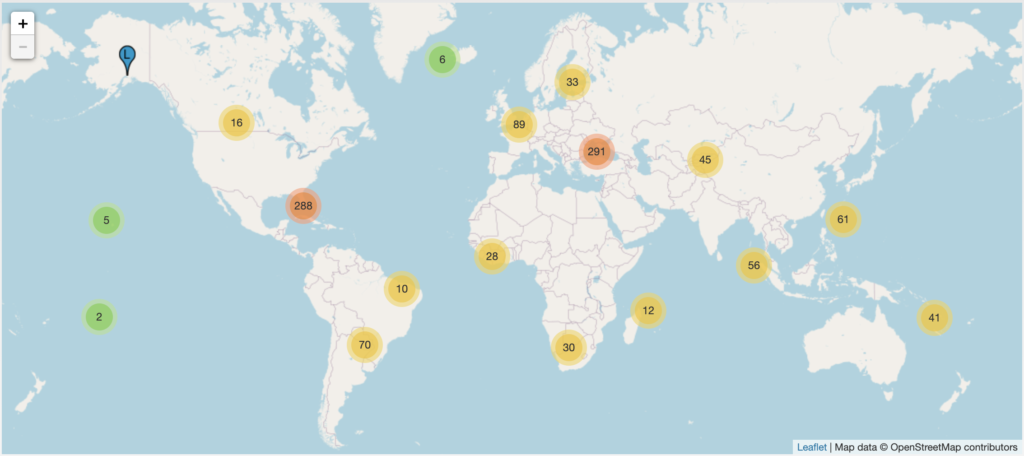

The DNS root server system uses IP Anycast. There are 13 root server identities (a.root-servers.net to m.root-servers.net), run by 12 root server operators, with a total of 1084 instances all over the world. Let’s look at some of the problems in the context of the root server system.

Decrease the round trip time to the root servers

The round trip time to the root servers depends on multiple factors, chiefly the availability of a root server instance within the country and optimal routing. While the first can be addressed by installing an instance of the root server in the country, the second is harder to address. Routing determines whether traffic to the root server from the last mile reaches the local instance or takes the transit route to an instance outside the country.

If the traffic transits outside the country, the result is increased latency and poorer DNS resolution performance.
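The effect can be checked from your own network by timing a query to a root server. The following is a minimal Python sketch using only the standard library; it assumes IPv4 reachability and that nothing on the path intercepts UDP port 53, and the names `build_query` and `measure_rtt` are illustrative rather than part of any tool. It sends one non-recursive `. NS` query, the kind of query a recursive resolver sends to a root server, and times the reply.

```python
import os
import socket
import struct
import time


def build_query(qname: str, qtype: int = 2, qclass: int = 1) -> bytes:
    """Build a minimal DNS query in RFC 1035 wire format (qtype 2 = NS, qclass 1 = IN)."""
    txid = struct.unpack("!H", os.urandom(2))[0]
    # Flags 0x0000: standard query with RD (recursion desired) cleared, as a resolver sends to the root.
    header = struct.pack("!HHHHHH", txid, 0x0000, 1, 0, 0, 0)
    labels = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in qname.rstrip(".").split(".")
        if label
    )
    return header + labels + b"\x00" + struct.pack("!HH", qtype, qclass)


def measure_rtt(server: str, qname: str = ".", timeout: float = 2.0) -> float:
    """Send one query over UDP port 53 and return the round trip time in milliseconds.

    Sketch only (assumption): IPv4, a single query, no retries.
    """
    query = build_query(qname)
    address = socket.gethostbyname(server)  # resolve before starting the clock
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)  # raises socket.timeout if no reply arrives
        start = time.perf_counter()
        sock.sendto(query, (address, 53))
        while True:
            reply, _ = sock.recvfrom(4096)
            if reply[:2] == query[:2]:  # ignore replies carrying a different transaction ID
                break
        return (time.perf_counter() - start) * 1000.0
```

For example, `measure_rtt("k.root-servers.net")` returns a single RTT in milliseconds. An RTT far above the latency to a nearby in-country host hints that the query left the country, though only a traceroute shows the actual path.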

Case in point: in India, Netnod, the root server operator managing i.root-servers.net, has an Anycast IPv4 node in Mumbai.

A traceroute from AS9498 to i.root-servers.net shows that traffic does not reach the local instance but instead takes the transit route.

The above image has been taken from a RIPE Atlas measurement.

Similarly, RIPE NCC is the root server operator managing k.root-servers.net. Again in India, it has Anycast IPv6 nodes in Mumbai and Noida.

A traceroute from AS9498 to k.root-servers.net likewise shows that traffic does not reach the local instance but instead takes the transit route.

The above image has been taken from a RIPE Atlas measurement.
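Besides traceroute, many root server instances answer a `hostname.bind` TXT query in the CHAOS class with an identifier of the instance that served it, which is a quick way to see which node you are hitting. From the command line, `dig @k.root-servers.net hostname.bind chaos txt` does this. The Python sketch below does the same with the standard library; it assumes the server still answers this query (operators are not obliged to) and handles only simple responses. `identify_instance` and its helpers are hypothetical names.

```python
import os
import socket
import struct

TXT, CHAOS = 16, 3


def encode_name(name: str) -> bytes:
    """Encode a domain name as length-prefixed labels ending in a zero byte."""
    labels = [label for label in name.rstrip(".").split(".") if label]
    return b"".join(bytes([len(l)]) + l.encode("ascii") for l in labels) + b"\x00"


def skip_name(msg: bytes, offset: int) -> int:
    """Return the offset just past a (possibly compressed) name in a DNS message."""
    while True:
        length = msg[offset]
        if length == 0:
            return offset + 1
        if length & 0xC0 == 0xC0:  # a 2-byte compression pointer terminates the name
            return offset + 2
        offset += 1 + length


def build_chaos_query(txid: int) -> bytes:
    header = struct.pack("!HHHHHH", txid, 0, 1, 0, 0, 0)
    return header + encode_name("hostname.bind") + struct.pack("!HH", TXT, CHAOS)


def parse_txt_answers(msg: bytes) -> list:
    """Extract the strings of TXT records in the answer section."""
    qdcount, ancount = struct.unpack("!HH", msg[4:8])
    offset = 12
    for _ in range(qdcount):
        offset = skip_name(msg, offset) + 4  # skip QTYPE and QCLASS
    texts = []
    for _ in range(ancount):
        offset = skip_name(msg, offset)
        rtype, _, _, rdlength = struct.unpack("!HHIH", msg[offset:offset + 10])
        offset += 10
        rdata = msg[offset:offset + rdlength]
        offset += rdlength
        i = 0
        while rtype == TXT and i < len(rdata):  # TXT RDATA: one or more <len><text> strings
            n = rdata[i]
            texts.append(rdata[i + 1:i + 1 + n].decode("ascii", "replace"))
            i += 1 + n
    return texts


def identify_instance(server: str, timeout: float = 2.0) -> list:
    """Hypothetical helper: ask `server` which Anycast instance answered (IPv4 only)."""
    txid = struct.unpack("!H", os.urandom(2))[0]
    address = socket.gethostbyname(server)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(build_chaos_query(txid), (address, 53))
        reply, _ = sock.recvfrom(4096)
    if struct.unpack("!H", reply[:2])[0] != txid:
        raise ValueError("transaction ID mismatch")
    return parse_txt_answers(reply)
```

`identify_instance("i.root-servers.net")` returns the identifier strings; naming conventions are operator-specific, so mapping them to a city is a manual step.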

If you aren’t familiar with the RIPE Atlas project, check the earlier post.

Prevent snooping of queries

In the case of traditional DNS, or DNS over port 53 (Do53), the traffic is unencrypted. In response to privacy concerns, and to secure DNS traffic between the client and the recursive resolver, the IETF standardised DNS-over-HTTPS (DoH) and DNS-over-TLS (DoT). While both protocols secure the communication between the client and the recursive resolver, traffic between the recursive resolver and the root servers is still sent in the clear, i.e. unencrypted.
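To make the privacy point concrete: a Do53 query is plain bytes on the wire, so anyone on the path between the recursive resolver and a root server can read the query name without any keys. The Python sketch below builds a query and then decodes it the way a passive observer would. `encode_query` and `snoop_qname` are illustrative names, and the sketch ignores EDNS and name compression.

```python
import struct


def encode_query(qname: str, qtype: int = 1, txid: int = 0) -> bytes:
    """Build a plain DNS query (qtype 1 = A, class IN) as it would travel over UDP port 53."""
    header = struct.pack("!HHHHHH", txid, 0, 1, 0, 0, 0)
    labels = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in qname.rstrip(".").split(".")
        if label
    )
    return header + labels + b"\x00" + struct.pack("!HH", qtype, 1)


def snoop_qname(payload: bytes) -> tuple:
    """Recover (qname, qtype) from a captured, unencrypted query payload."""
    labels = []
    offset = 12  # the question section starts right after the fixed 12-byte header
    while payload[offset] != 0:
        length = payload[offset]
        labels.append(payload[offset + 1:offset + 1 + length].decode("ascii"))
        offset += 1 + length
    (qtype,) = struct.unpack("!H", payload[offset + 1:offset + 3])
    return ".".join(labels) + ".", qtype
```

With DoT or DoH between the client and the resolver, the same bytes are wrapped in TLS on that leg, but the resolver-to-root leg still carries them readable as shown.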

Faster negative responses to queries for non-existent domains

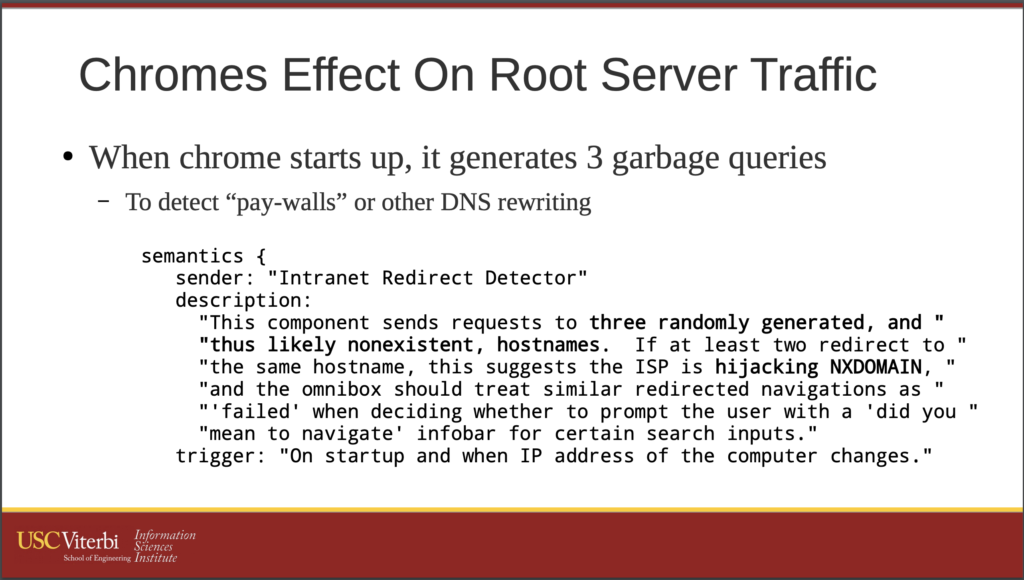

I would like to point you to the earlier posts on Chromium-based browsers and DNS, and Junk to the root, as they set the context for this one.

A recent study by ICANN OCTO reveals that the vast majority of queries to the root servers are for names that do not exist in the root zone. The goal is to provide faster negative responses to the stub resolver for such non-existent domains and to eliminate sending these junk queries to the root servers entirely.
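One way to picture this: if the resolver knows the full list of top-level domains in the root zone, it can answer a query for a non-existent TLD itself, without asking a root server. The sketch below assumes a root zone file in the one-record-per-line layout published by IANA (owner, TTL, class, type, data) and omits DNSSEC validation entirely; `load_tlds` and `is_junk` are hypothetical helpers, not any resolver's API.

```python
def load_tlds(zone_lines) -> set:
    """Collect the TLDs delegated in a root zone file (owner names of NS records)."""
    tlds = set()
    for line in zone_lines:
        fields = line.split()
        # Assumed layout: <owner> <ttl> IN <type> <rdata...>; comments and other types are skipped.
        if len(fields) >= 5 and fields[2] == "IN" and fields[3] == "NS" and fields[0] != ".":
            tlds.add(fields[0].rstrip(".").lower())
    return tlds


def is_junk(qname: str, tlds: set) -> bool:
    """True if qname's TLD is absent from the root zone, so NXDOMAIN can be answered locally."""
    labels = qname.rstrip(".").lower().split(".")
    tld = labels[-1]
    if not tld:  # the root itself always exists
        return False
    return tld not in tlds
```

For example, `is_junk("printer.local", load_tlds(open("root.zone")))` would be `True`, so that query never needs to leave the resolver.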

Increase the resiliency of the root server system

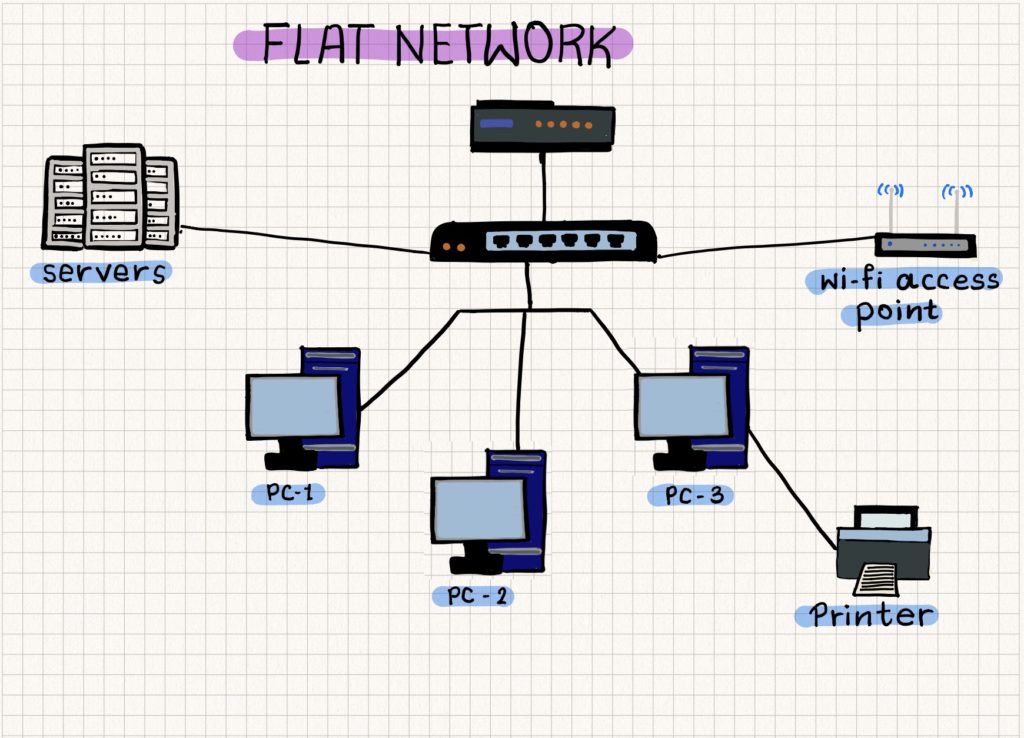

In the context of DNS, the primary intention of using IP Anycast is to have the topologically closest server provide the answer. This model breaks down under suboptimal routing, as seen in the traceroute examples to the root servers earlier.

An additional benefit of IP Anycast is that, given optimal routing, the impact of a DDoS attack is limited because it stays confined to certain areas. In the past, IP Anycast has helped mitigate attacks on the root server system: the attack was confined to certain Anycast instances of the root server, where it saturated network connections.

On the other hand, the Mirai botnet attack on Dyn's infrastructure shows that a large-scale attack can cause congestion across Anycast instances, resulting in unavailability of services.
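These three situations (containment, suboptimal routing, a large-scale attack) can be illustrated with a deliberately simplified model in which each region's traffic goes to the instance with the lowest path cost, a toy stand-in for BGP best-path selection. The instance names, regions, costs and query rates below are made up for illustration; real Anycast catchments depend on BGP policy, not just distance.

```python
from collections import Counter


def route(region: str, costs: dict) -> str:
    """Return the instance with the lowest path cost from a region (toy stand-in for BGP)."""
    region_costs = costs[region]
    return min(region_costs, key=region_costs.get)


def load_per_instance(traffic: dict, costs: dict) -> Counter:
    """Sum the queries per second that land on each Anycast instance."""
    load = Counter()
    for region, qps in traffic.items():
        load[route(region, costs)] += qps
    return load


# Hypothetical instances "BOM" (Mumbai) and "AMS" (Amsterdam).
optimal = {"india": {"BOM": 1, "AMS": 6}, "europe": {"BOM": 6, "AMS": 1}}
# Same topology, but India's upstream prefers a transit path to AMS.
suboptimal = {"india": {"BOM": 4, "AMS": 3}, "europe": {"BOM": 6, "AMS": 1}}

local_attack = {"india": 1_000_000, "europe": 1_000}          # flood originates in India
distributed_attack = {"india": 1_000_000, "europe": 1_000_000}  # botnet everywhere

print(load_per_instance(local_attack, optimal))       # flood stays on BOM
print(load_per_instance(local_attack, suboptimal))    # flood lands on AMS with Europe's traffic
print(load_per_instance(distributed_attack, optimal)) # every instance is loaded
```

In this model, containment holds only while routing keeps attack traffic local; a transit path or a botnet spread across all regions pushes the load onto every instance.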

Finally, we get to a set of broader questions: how do we increase resiliency against a DDoS on the root server system? Since the root server system doesn’t penalise abuse (period), should we continue abusing it?

One possible solution, proposed in RFC 7706, is to run a local copy of the full root zone on the loopback interface. In essence, the root zone served on the loopback acts as the upstream for the recursive resolver, and the recursive resolver should be able to validate the zone data from that upstream using DNSSEC.

To implement this, one first needs a copy of the root zone. The following root servers currently allow transfer of the root zone using AXFR over TCP:

| Sl. No | Root server |
| --- | --- |
| 1 | b.root-servers.net |
| 2 | c.root-servers.net |
| 3 | d.root-servers.net |
| 4 | f.root-servers.net |
| 5 | g.root-servers.net |
| 6 | k.root-servers.net |
| 7 | lax.xfr.dns.icann.org & iad.xfr.dns.icann.org (L-root server) |
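The transfer itself is an ordinary DNS query with QTYPE AXFR (252), sent over TCP with the 2-byte length prefix that DNS over TCP requires. The server streams back one or more messages, and the transfer is complete once the closing SOA record arrives, since the zone both starts and ends with its SOA. In practice `dig @f.root-servers.net . AXFR` does this; the minimal Python sketch below shows the mechanics, assuming the chosen server from the table still permits AXFR from your address. It does not parse records beyond their type, and the function names are illustrative.

```python
import socket
import struct

AXFR, SOA, IN = 252, 6, 1


def axfr_request(zone: str = ".", txid: int = 0x4242) -> bytes:
    """Build an AXFR query framed for TCP: 2-byte length prefix + DNS message."""
    name = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in zone.rstrip(".").split(".")
        if label
    ) + b"\x00"
    msg = struct.pack("!HHHHHH", txid, 0, 1, 0, 0, 0) + name + struct.pack("!HH", AXFR, IN)
    return struct.pack("!H", len(msg)) + msg


def recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes from a TCP socket."""
    buf = b""
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("connection closed mid-message")
        buf += chunk
    return buf


def skip_name(msg: bytes, offset: int) -> int:
    """Return the offset just past a (possibly compressed) name."""
    while msg[offset] != 0:
        if msg[offset] & 0xC0 == 0xC0:  # a compression pointer ends the name
            return offset + 2
        offset += 1 + msg[offset]
    return offset + 1


def answer_types(msg: bytes) -> list:
    """List the RR types found in a message's answer section."""
    qdcount, ancount = struct.unpack("!HH", msg[4:8])
    offset = 12
    for _ in range(qdcount):
        offset = skip_name(msg, offset) + 4  # skip QTYPE and QCLASS
    types = []
    for _ in range(ancount):
        offset = skip_name(msg, offset)
        rtype, _, _, rdlength = struct.unpack("!HHIH", msg[offset:offset + 10])
        types.append(rtype)
        offset += 10 + rdlength
    return types


def transfer_root_zone(server: str, timeout: float = 30.0):
    """Yield the raw DNS messages of an AXFR of the root zone from `server`."""
    soa_count = 0
    with socket.create_connection((server, 53), timeout=timeout) as sock:
        sock.sendall(axfr_request("."))
        while soa_count < 2:  # the transfer begins and ends with the SOA record
            (length,) = struct.unpack("!H", recv_exact(sock, 2))
            msg = recv_exact(sock, length)
            rcode = msg[3] & 0x0F  # RCODE is the low 4 bits of the flags field
            if rcode != 0:
                raise RuntimeError(f"transfer refused or failed, RCODE={rcode}")
            soa_count += answer_types(msg).count(SOA)
            yield msg
```

Usage would look like `for msg in transfer_root_zone("f.root-servers.net"): ...`, writing each message (or its records, once parsed) to disk.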

Pulling the root zone manually has an operational issue: one needs to periodically check whether the root zone at the upstream has changed and, if so, update the copy of the root zone served on the loopback.
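That periodic check comes down to comparing SOA serial numbers, and serials should be compared using the wrap-around serial number arithmetic of RFC 1982 rather than plain integer comparison. The sketch below assumes the local copy is a zone file in the one-record-per-line layout of IANA's root.zone. It also assumes the upstream serial was obtained separately, for example from the third field of `dig @k.root-servers.net . SOA +short`. The helper names are hypothetical.

```python
def serial_newer(s1: int, s2: int) -> bool:
    """True if serial s1 is newer than s2 under RFC 1982 32-bit serial arithmetic."""
    diff = (s1 - s2) % 2**32
    return 0 < diff < 2**31


def local_serial(zone_lines) -> int:
    """Return the SOA serial from lines laid out as <owner> <ttl> IN SOA <mname> <rname> <serial> ..."""
    for line in zone_lines:
        fields = line.split()
        if len(fields) >= 7 and fields[2] == "IN" and fields[3] == "SOA":
            return int(fields[6])
    raise ValueError("no SOA record found")


def needs_refresh(local: int, upstream: int) -> bool:
    """Re-transfer only when the upstream copy is strictly newer than the local one."""
    return serial_newer(upstream, local)
```

Run on a schedule (for example from cron), a check like this avoids re-transferring an unchanged zone.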

Even though RFC 7706 is Informational, recursive resolver software such as ISC BIND, Unbound and Knot Resolver has built-in support for it.

Slaving of the root zone: ISC BIND 9.16.3 (stable)

Part II of this post will contain operational instructions for running a local copy of the root zone and document some of the pitfalls of doing so.