An interesting discussion came up at a nerd dinner :-), where, the argument was that a recursive resolver already knows where the root servers are (root hints ) i.e the IPv4 and IPv6 addresses, so then what is the purpose of running a local copy of the root zone in the recursive resolver aka RFC 8806?

What are the root hints?

The root hints are a list of the servers that are authoritative for the root domain “.”, along with their IPv4 and IPv6 addresses. In other words, this is a collection of NS, A, and AAAA records for the root nameservers.

Source

Coming back to the question, to answer this, let’s look at a few assumptions,

- The root servers are going to be available at all times

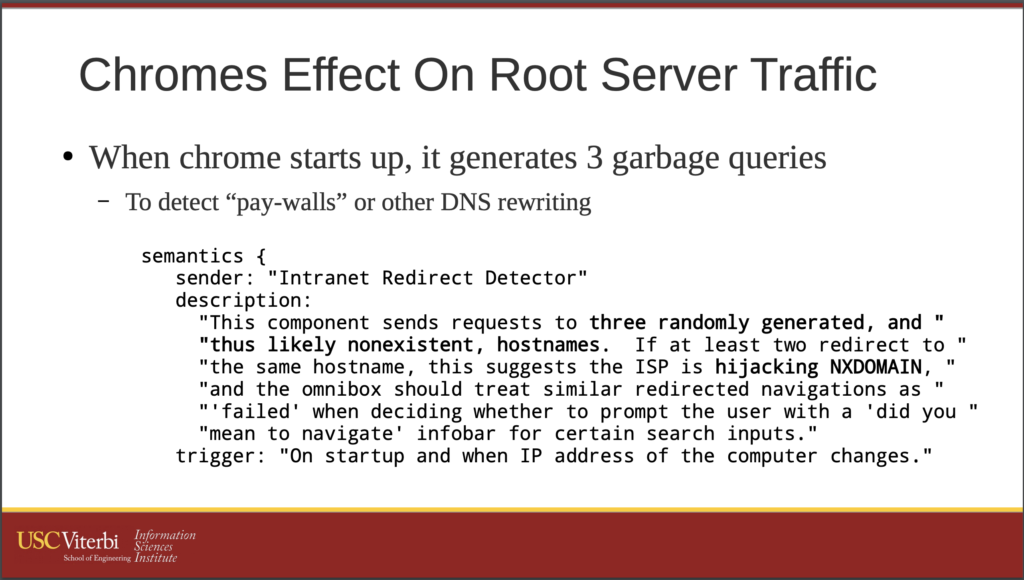

- Even if the DNS query is not for a fully qualified domain name(FQDN), send the query to the root aka send junk to the root

- Because my recursive resolver knows the IPv4 and IPv6 addresses of the root servers, and, there are local instances of the root servers in the country, the DNS query to the root will hit the local instance

All the above assumptions are the reason recursive resolvers must embrace RFC 8806. For now, let’s focus on the third point because just knowing the IP addresses of the root servers is not enough. Packets need to traverse to the right address.

Aye Aye BGP!

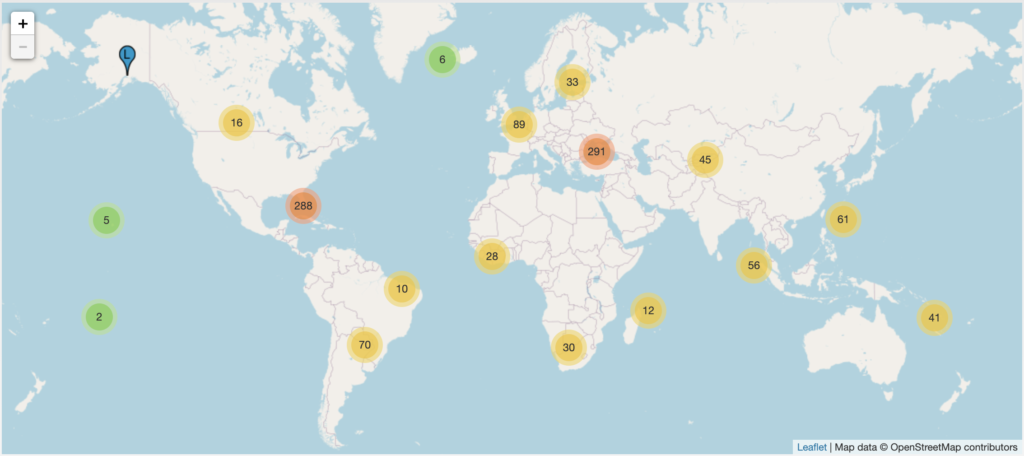

Even if there’s a local root server instance in your country and your recursive resolver knows its IP address (from the root.hints file), the actual query might still transit outside the country due to BGP path selection inefficiencies. Case in point.

All root servers use IP anycast, which means:

- The same IP address (e.g., for F-root:

192.5.5.241) is announced by multiple physical servers worldwide. - BGP determines which instance your resolver talks to, based on routing.

So even if:

- A root server instance is in your country,

- Your resolver queries

192.5.5.241(from root hints),

…it could still be routed to another country, depending on how BGP steers the traffic at that time.

RFC 8806 suggests keeping a local copy of the root zone, which allows the resolver to:

- Provide the same referrals the root server would give, but from memory/disk instead of waiting for a network response.

A recursive resolver with a local copy of the root zone is shaving off the round-trip time(RTT) to the root servers entirely, thereby eliminating any inefficiency in BGP routing. This may not mean much in low-traffic environments, but if you are an ISP/network operator, shaving even 20–50ms per resolution adds up.

Despite high TTLs on DNS A and AAAA records, a local root zone is especially beneficial during a cold start, when the resolver has no cached data.

Stop sending junk DNS queries to core Internet infrastructure

While it may not be possible to fix all software and ensure full RFC compliance, we can certainly put measures in place to mitigate unwanted or intentional or unintentional abuse of core Internet infrastructure.

An added benefit? It also eliminates junk traffic to the root servers. From a make-the-internet-better perspective, that’s a net win!

Recommended reading

If you found this blog post useful, you might find RBI Cyber Security policy .bank.in and .fin.in interesting.